What time is it?! Well, I have no idea what time it is while you’re reading this, but I CAN tell you the time on the Doomsday Clock… which back in 2020 was set to 100 seconds to midnight (“midnight” = world apocalypse). For reference, this is the closest to “midnight” that the Doomsday Clock has been set since its inception back in 1947, when it was started by Einstein and a few other scientists from the Manhattan Project to gauge how close we are to nuclear apocalypse. Don’t worry, the world isn’t actually ending in the next 100 seconds (as far as I know). These days, the Doomsday Clock has some other aspects thrown in, like climate change and biosecurity threats, but nuclear risk still remains a harrowing concern to our world. Compared to other global threats, the risk of nuclear apocalypse is relatively new due to the nascence of nuclear technology within the last 100 years. Having a healthy fear of nuclear technology seems to be a good thing, but nuclear technology has also proven to be beneficial. This week, we’ll take a closer look at the history of nuclear technology (primarily not-so-nice nuclear weaponry), some of the alternative uses of nuclear technology, and what appear to be some of the future developments in the area. So no… this Grow Weekly article does not provide a guide for surviving a nuclear apocalypse. You’re on your own if that actually happens.

A Short History of Nuclear Technology

It’s difficult to select a “starting point” for when nuclear technology really came into its own. Albert Einstein published a paper in 1905 that theoretically related mass and energy (the famous E=mc²), which is a basis for the immense amounts of energy generated in nuclear reactions. We could also go a bit further back and talk about Pierre and Marie Curie who experimented with radioactive elements and coined the term “radioactivity” in the 1890s. We’ll start a bit further along, though, and jump to 1938… conveniently one year before World War II broke out. Note that I do skim over a few historic developments, because otherwise this timeline would be longer than your attention span.

1938: German scientists Hahn and Strassmann consult with Austrian scientist Meitner and her nephew (Otto Frisch) regarding an experiment in which Uranium atoms were split by firing neutrons at them. Based on the initial and resulting masses in the experiment, they confirm Einstein’s theory of E=mc² and prove that nuclear fission occurred. Nuclear fission is when the nucleus of an atom is split, releasing energy and often radioactive elements.

An illustration of the Uranium-235 nuclear fission reaction.

1939: A number of developments in nuclear technology occurred this year. Among them is the idea that the Uranium-235 isotope is more likely to undergo fission than the more prevalent Uranium-238 isotope. For reference, Uranium-235 makes up less than 1% of natural Uranium metal. Scientists also formulate the concept of “critical mass”, which defines the amount of fissionable material required to create a self-sustaining reaction. This idea of critical mass is important both for controlling nuclear reactors for energy generation and for producing the uncontrolled reaction in a nuclear explosion.

1942: A group of scientists at the University of Chicago led by Enrico Fermi develop a self-sustaining, small-scale nuclear reactor on a squash court on the university campus. They call the reactor “Chicago Pile-1”. This is the year that the United States Army takes over development of “fissionable” materials… basically purifying Uranium-235 or Plutonium-239 to get closer to the critical mass necessary for a nuclear bomb. Cue the Manhattan Project; Soviet, US, and German spies; and basically the beginnings of the Cold War.

An image of Chicago Pile-1 at the University of Chicago

1945: Enough Plutonium-239 and purified Uranium-235 had been produced in the United States to create a bomb during this year. Much of the Uranium used for the bomb in the United States was obtained from the Belgian-occupied Congo. The first atomic bomb based on Plutonium was then tested in Alamogordo in the state of New Mexico in July. A Uranium-based bomb was never tested prior to one being dropped on the city of Hiroshima on August 6th. The second bomb, a Plutonium-based atomic bomb, was dropped on the city of Nagasaki three days later. World War II ends. This is also the year that the Soviet Union ramps up to production-scale for refining of Plutonium-239 and Uranium-235.

1946-1963: Following World War II, the United States, Soviet Union, France, the United Kingdom, and China become nuclear powers (in possession of nuclear weapons). More nuclear weapon tests occur, including those using the newly developed hydrogen bomb – a thermonuclear weapon that makes use of both nuclear fission (with Uranium and Plutonium) and nuclear fusion (the fusing of Hydrogen atoms… just like inside the Sun). The United States also develops its first nuclear powered submarine, the USS Nautilus, in 1954. Some of the focus did shift towards harnessing nuclear power for energy production during this time as well. The first experimental nuclear reactor to produce a tiny amount of electricity was at Argonne National Laboratory in 1951. In 1954, the world’s first large-scale nuclear-powered electrical generator began operation in Obninsk of the Soviet Union. In 1963, the Limited Test Ban Treaty (LTBT) is signed to ban nuclear weapons tests, except those conducted underground. France and China did not sign this agreement.

The Shippingport nuclear reactor in Pennsylvania, finished in 1957, was the first commercial nuclear reactor used for electricity production in the United States.

1960s-Early 1970s: The Non-Proliferation Treaty (NPT) of 1968 is signed to ban nuclear armament in countries that did not already possess nuclear weapons and to encourage nuclear disarmament and reduction of weapons testing. This treaty is not signed by every country, which is obvious since India, South Africa, Pakistan, North Korea, and Israel then developed nuclear weapons capabilities. As technology develops, nuclear power generation begins to spread to private companies and to other countries, such as Kazakhstan.

1970s-early 1980s: Public enthusiasm for nuclear energy begins to decline for various reasons, including the Three Mile Island nuclear incident in Harrisburg, Pennsylvania occurring in 1979 (did not result in direct deaths, but too close for comfort for many) as well as issues in the management of radioactive waste materials.

1986: This list wouldn’t be complete without mentioning the Chernobyl disaster in the Soviet Union in April of this year. Sometimes considered to be one of the greatest human-made disasters in history, this nuclear accident resulted in the release of a large amount of radioactive material. Some researchers have estimated that around 4,000 people exposed to high levels of radiation from the event died of radiation-related cancer, including numerous incidences of thyroid cancer in children and adolescents in areas nearby. An “exclusion zone” around the plant will likely not be habitable for thousands of years due to the long-term impacts of radiation (although a number of animals have moved into the area around the reactor). This accident resulted in even less enthusiasm for nuclear power, though nuclear plants were still being built.

The number of operable nuclear reactors begins to level off in the mid to late 1980s.

1990s: In 1996, the United Nations adopts the Comprehensive Nuclear-Test-Ban Treaty (CTBT) in an effort to stop all nuclear testing… the United States, India, Pakistan, Iran, China, and Israel are among the countries that do not ratify the treaty. India and Pakistan demonstrate successful nuclear weapons tests in 1998, which raises concerns due to historic conflict between the two nations.

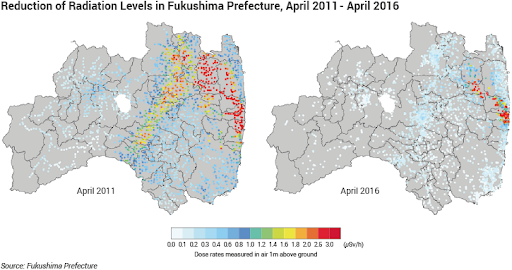

2011: The Fukushima Daiichi nuclear accident occurs in March due to a tsunami following an earthquake (the reactors were fine and dandy during the earthquake itself). Radiation is released in the form of a few different radioactive elements with a half-life of a couple days to a couple decades (at least it’s not 1,000 years!).

While we do seem to hear plenty about the terrors of nuclear power from accidents such as Chernobyl or Fukushima, nuclear power is still alive and well around the world today. It supplies about 10% of the world’s electricity! New methods of harnessing nuclear fission for energy are also still being developed and refined, as well as the endless pursuit towards effectively harnessing the power of nuclear fusion (combining Hydrogen atoms instead of breaking Uranium and Plutonium or other heavy elements) for electricity generation. You can still easily see the effects of nuclear armament on the world today through geopolitical “touchy” topics such as the Iran Nuclear Deal in 2015 and North Korea’s nuclear weapons testing.

Other Uses of Nuclear Technology

Nuclear weapons cause quite a commotion politically, and nuclear energy has created concerns due to past accidents and breakdowns in safety measures. Besides these two more apparent uses of nuclear technology, there are actually a number of other uses that are extraordinarily helpful in society today. Some of these uses are covered in more detail below...

Radiocarbon Dating: This technology was proposed by Willard Libby back in 1946, and it is adored by archaeologists everywhere (actually I have no clue how archaeologists feel about radiocarbon dating). Radiocarbon dating makes use of the radioactive Carbon-14 isotope. Whereas Carbon atoms typically come in the form of stable Carbon-12, the radioactive Carbon-14 isotope is formed naturally in the atmosphere. Just through environmental processes such as photosynthesis and eating, living organisms actually take up this radioactive Carbon-14 from the atmosphere (which is in low levels and doesn’t harm humans). Once the living thing dies, though, it stops taking in Carbon-14. Carbon-14, just like other radioactive elements, then slowly goes through radioactive decay with a known half-life around 5,730 years. This means that measuring the amount of radioactive Carbon-14 in a dead carbon-containing substance can give you an idea of when that thing died. This idea of radiocarbon dating has been used to determine the age of fossils as well as human artifacts from civilizations that were around thousands of years ago.
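If you like seeing the arithmetic, here is a minimal Python sketch of the half-life idea described above. It assumes the simplest possible model: the only input is the fraction of the original Carbon-14 still left in the sample, and the function name `estimate_age_years` is made up for illustration. Real labs apply calibration corrections that this sketch ignores.

```python
import math

# Half-life quoted in the article, in years.
CARBON_14_HALF_LIFE_YEARS = 5730


def estimate_age_years(fraction_remaining: float) -> float:
    """Estimate years since death from the fraction of Carbon-14 still present.

    Hypothetical helper for illustration only (no calibration corrections).
    """
    if not 0 < fraction_remaining <= 1:
        raise ValueError("fraction_remaining must be greater than 0 and at most 1")
    # Decay law: fraction = (1/2) ** (age / half_life)
    # Solving for age: age = half_life * log2(1 / fraction)
    return CARBON_14_HALF_LIFE_YEARS * math.log2(1 / fraction_remaining)


if __name__ == "__main__":
    # Half of the Carbon-14 left means one half-life has passed.
    print(round(estimate_age_years(0.5)))
    # A quarter left means two half-lives have passed.
    print(round(estimate_age_years(0.25)))
```

So a sample with half of its Carbon-14 left is about one half-life old, and a sample with a quarter left is about two half-lives old.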

Radiotracers: Just as we can use radioactive Carbon-14 to track the age of something, we can use other radioactive elements to track the movement of chemicals in the environment… and in our own bodies. Remember that radioactive atoms are still the same element - Carbon-14 is radioactive and emits traces of radiation, but at the end of the day it’s still Carbon. Due to this, we can let loose a few radioactive atoms into the environment and then trace where they naturally go by tracking the radiation emitted. For example, releasing small levels of Mercury-203 isotope can help scientists understand how mercury contaminants travel through an ecosystem, from water into organisms and up through the food chain. The radioactive Mercury-203 acts the same as the common stable Mercury element, but it can be easily traced due to its radioactivity. Only a tiny amount is needed, so the benefits of newfound understanding typically outweigh the costs of “intentional” pollution.

A similar idea is used on the scale of the human body. The common medical imaging technique of Positron Emission Tomography (PET) typically makes use of the radioactive Fluorine-18 isotope attached to a sugar molecule (called fluorodeoxyglucose if you’re interested), although other radioactive tracers may be used. Since sugar is processed and used for energy in the human body, doctors can trace the radiation coming from the radioactive Fluorine-18 to see which parts of the human body are using more or less energy in the form of sugar. Typically, cancers use more energy than normal, and blockages in blood vessels can be seen as areas of lower sugar metabolism. This concept of tracing radioactive elements as they go about their business can also be applied to molecules other than sugar as they get processed in the human body.

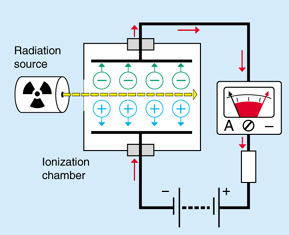

Basic concept of an ionization-type smoke detector where charged radiation completes an electric current

Smoke detectors: Yes, some smoke detectors are radioactive. More accurately, they contain a tiny amount of radioactive Americium-241 isotope. These radioactive types of smoke detectors are called ionization-type detectors. They function through the Americium-241 emitting positively charged radiation that creates a measurable electric current in normal air. When smoke is present in the air, the measurable electric current (created by radiation) is noticeably decreased, resulting in an alarm. At this point, open the window because you burned your dinner again… or it’s an actual fire and you need to get out of there NOW.

Killing: Relax, I’m talking about killing microorganisms and even cancer cells. Radiation, while tolerable in small doses to humans, can certainly cause death in all sorts of organisms. By harnessing this radiation in a controlled way, though, we can kill organisms where it counts. Radioimmunotherapy, a form of radiotherapy, is a method where a radioactive atom is attached to a specific targeting molecule that is able to recognize and attach to cancer cells (typically a monoclonal antibody if you’re into this sort of stuff). When injected into the body, the targeting molecule with the radioactive atom binds to the specific cancer cell, and then the radioactive atom that was along for the ride does its thing and releases deadly radiation to kill the cancer cells.

There are still plenty of other uses for nuclear technology not discussed here, such as radioluminescence (think of that classic green glow from radioactive materials), but I’ll leave that up to you to explore.

The Path Ahead

Why stop there? After all, there’s still plenty of room for advancement in the realm of nuclear technology. Well, yes… but also no. Nuclear technology presents some incredible opportunities along with some genuine concerns as we proceed forward.

One of those opportunities is harnessing nuclear fusion for energy generation. While we’ve been able to invent the Hydrogen bomb as a weaponized form of nuclear fusion… we still have not been able to figure out how to apply that to reasonably generate electricity. For reference, nuclear fusion (combining atoms) generates more energy per unit mass of fuel than nuclear fission (breaking atoms), and it is the same process used by the sun to create energy. Instead of using solar panels to capture the sun’s energy, why not just make our own mini sun? To achieve nuclear fusion, an immense amount of energy and pressure is needed to jump-start - and contain - the reaction. Nuclear fusion reactors attempt to combine deuterium (Hydrogen-2 isotope) and tritium (Hydrogen-3 isotope) under high temperature and pressure. This is achieved in the Sun through gravitational forces, which isn’t as feasible here on Earth. A few different nuclear fusion reactor designs are being pursued concurrently:

Tokamaks: This form of nuclear fusion reactor was first designed in 1951. It is developed in a toroidal shape and uses an extremely strong magnetic field to force the two hydrogen isotopes (deuterium and tritium) to fuse together. The International Thermonuclear Experimental Reactor (ITER) is a newer tokamak fusion reactor being built in France with a projected completion date in 2025.

A picture of the International Thermonuclear Experimental Reactor (ITER) being built in France. This will be the world’s largest tokamak nuclear fusion reactor when it eventually operates - hopefully starting in 2025.

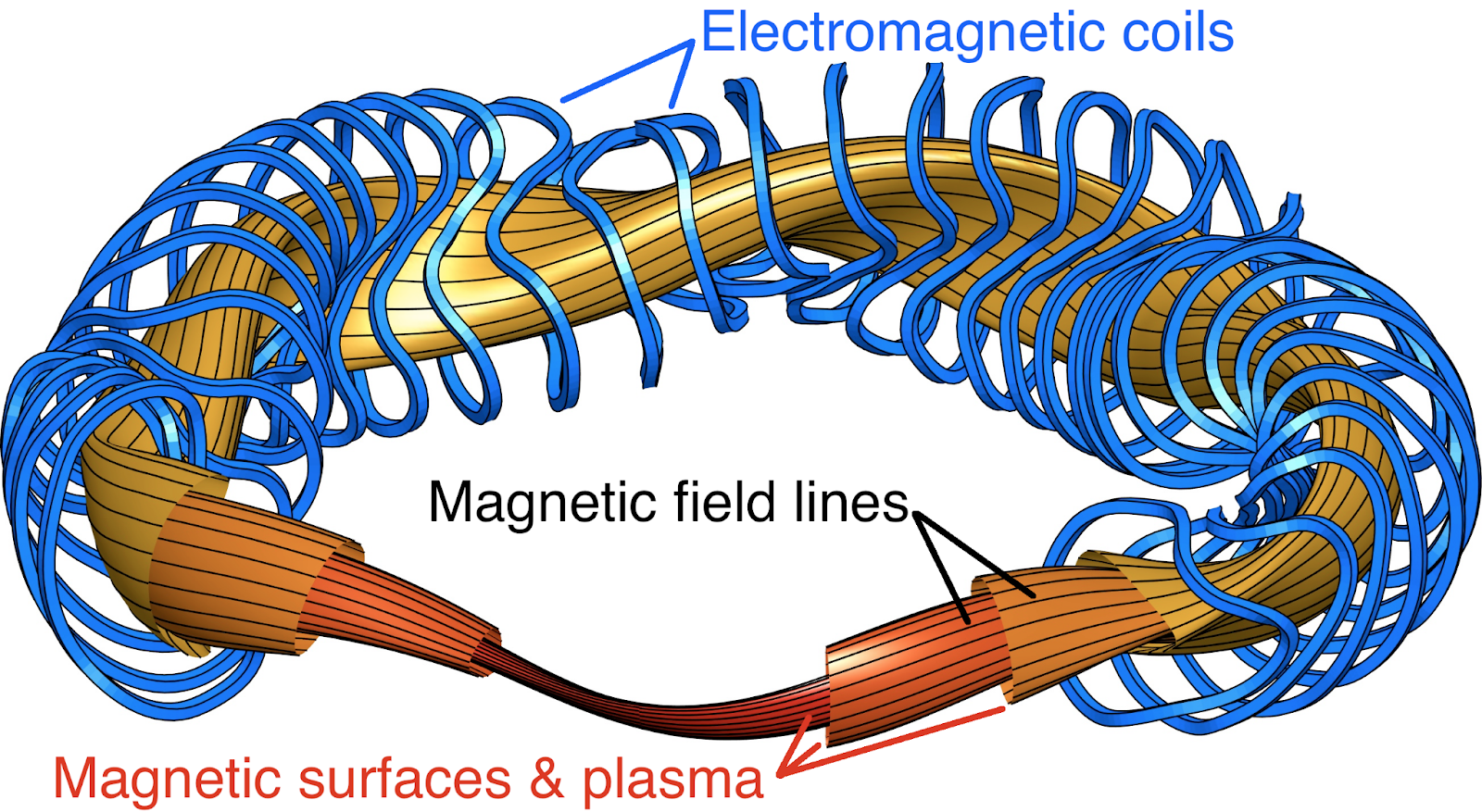

Stellarators: Similar to tokamaks since they make use of magnetic fields to start and contain fusion reactions. This form of fusion reactor makes use of a weird helical geometry to better control the magnetic field and stabilize the internal current in the reactor.

Conceptual model of a stellarator nuclear fusion reactor

Inertial confinement: Lasers or ion beams are shot at a small pressurized pellet of fusion fuel (deuterium and tritium). This creates the conditions to spark ignition of nuclear fusion. Lawrence Livermore National Laboratory (LLNL) in California completed such a facility for nuclear fusion back in 2009.

There are a few other designs and hybrid technologies as well, which are not discussed here for the sake of brevity…

One concern with nuclear fusion is the handling of tritium (Hydrogen-3 isotope) since it is difficult to contain and is radioactive. This concern for radiation exposure is shared with nuclear fission reactors using Uranium and other radioactive isotopes. Radioactive wastes and materials generated in some of these reactions have long half-lives and take a while to become “not-radioactive”. For example, tritium has a half-life of 12.3 years, so it takes about 125 years to deteriorate enough to be considered a low risk to human health. Creating and managing materials that will remain dangerous for years, decades, or even centuries is somewhat disconcerting, although we can also consider that some plastic products take just as long to degrade in a landfill.
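To see where a number like 125 years can come from, here is a small Python sketch of the same half-life math. The function name `fraction_remaining` is hypothetical, and I am assuming the 125-year figure reflects a "roughly ten half-lives" rule of thumb, which the sources do not spell out.

```python
# Half-life of tritium quoted in the article, in years.
TRITIUM_HALF_LIFE_YEARS = 12.3


def fraction_remaining(years: float, half_life_years: float) -> float:
    """Fraction of a radioactive isotope left after a given number of years.

    Hypothetical helper for illustration only.
    """
    # Each half-life that passes cuts the remaining amount in half.
    return 0.5 ** (years / half_life_years)


if __name__ == "__main__":
    half_lives = 125 / TRITIUM_HALF_LIFE_YEARS
    left = fraction_remaining(125, TRITIUM_HALF_LIFE_YEARS)
    print(f"Half-lives elapsed after 125 years: {half_lives:.1f}")
    print(f"Fraction of tritium remaining: {left:.4%}")
```

After 125 years, a little over ten half-lives have passed, and less than a tenth of a percent of the original tritium is left.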

Radioactive wastes are typically managed in one of two ways. One way is through recycling, which is feasible with a large percentage of used Uranium in nuclear reactors for example. Here, the used Uranium fuel, including some Plutonium byproduct now, can be reprocessed and added into new Uranium fuel rods to be reused. Another management approach is to basically bury radioactive waste underground until it’s deteriorated enough to no longer be as harmful to humans. This idea of disposing of radioactive wastes through burial is an issue seen in the constantly delayed construction and use of Yucca Mountain in Nevada as a radioactive waste disposal site.

The entrance to the underground radioactive waste storage site in Yucca Mountain.

Other methods have also been explored for radioactive waste disposal, such as launching it into space where we don’t have to deal with it (but this was cost-prohibitive and is not used today).

As the use of nuclear technologies continues to prosper, the world continues to grapple with how to properly deal with the technology’s incredible opportunities and dangers. I guess a key takeaway here is that with great power comes great responsibility. And with that… I think it’s time to go build my nuclear fallout shelter and stock it with water bottles and peanut butter.

To Think About…

Would you rather have to deal with a nuclear apocalypse or a zombie apocalypse? Just kidding, but actually do you think there is a high risk for nuclear apocalypse based on the current state of world politics?

Does your local electric power utility use any energy generated from nuclear power plants? Can you think of nuclear technologies that you’ve used or interacted with recently? Keep in mind that the technologies listed in this article are not fully comprehensive of available nuclear technologies (some more are listed here: https://www.world-nuclear.org/information-library/non-power-nuclear-applications/overview/the-many-uses-of-nuclear-technology.aspx)

Do you think the benefits of nuclear technologies and nuclear energy generators outweigh the potential costs and dangers?

Sources

https://thebulletin.org/doomsday-clock/

https://www.nationalgeographic.com/culture/article/chernobyl-disaster

https://www.atomicheritage.org/history/chicago-pile-1

http://chemed.chem.purdue.edu/genchem/topicreview/bp/ch23/fission.php

https://www.energy.gov/sites/prod/files/The%20History%20of%20Nuclear%20Energy_0.pdf

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4165831/

https://www.world-nuclear.org/information-library/current-and-future-generation.aspx

https://advances.sciencemag.org/content/5/10/eaay5478

https://www.snmmi.org/AboutSNMMI/Content.aspx?ItemNumber=29736

https://www.itnonline.com/article/what-pet-imaging

http://large.stanford.edu/courses/2011/ph241/eason1/

https://www.frontiersin.org/articles/10.3389/fmars.2020.00406/full

https://www.iaea.org/sites/default/files/publications/magazines/bulletin/bull54-3/54305610708.pdf

https://www.acs.org/content/acs/en/education/whatischemistry/landmarks/radiocarbon-dating.html

https://knpr.org/knpr/2019-11/house-yucca-mountain-advocate-retiring-exiting-nuke-waste-fight

https://terpconnect.umd.edu/~mattland/projects/1_stellarators/

https://www.foronuclear.org/en/updates/news/iter-opportunities-for-industry-research-and-innovation/